The "Mechanical Curator" is an experiment in providing undirected engagement with the British Library's digital content. Undirected?

Random, fortuitous, haphazard, undirected, unplanned, and most importantly, unpredictable. There are already many ways to discover great content that you know you like, but how do you find things that you cannot begin to describe?

The majority of researchers begin their search for content using a general-purpose search engine (Ithaka S+R | Jisc | RLUK: UK Survey of Academics 2012 [PDF]). It is easy to forget just how phenomenally powerful these engines can be at leading researchers to content that they know they want. This is also their shortcoming. The normal mode of searching makes it very difficult to find things that are not yet known. Keyword searches make it hard to collide ideas and concepts, to view things from different perspectives, and to see what might fit together.

While many of the major providers have attempted to show related content alongside search results, these efforts fall short of being serendipitous. Searching fails when the researcher does not even know what they might want to see, or how to describe it in words. The Mechanical Curator approaches discovery from the opposite angle: it publishes content as it sees fit, without an outside agent directing what it should publish.

"I don't know art but I know it when I see it."

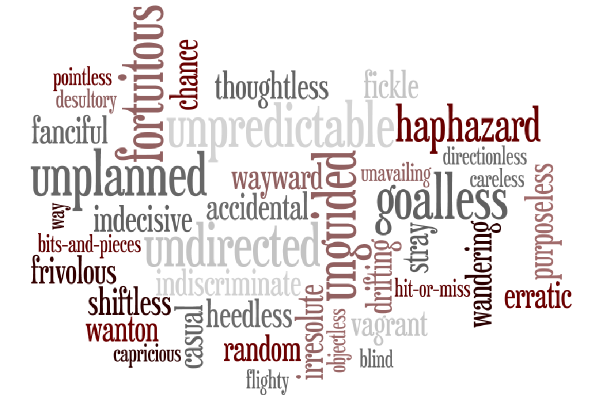

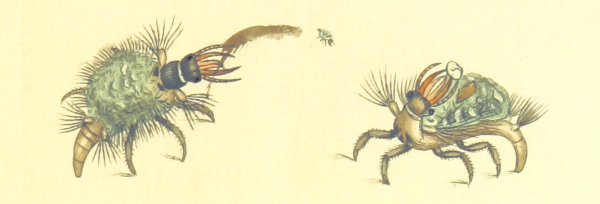

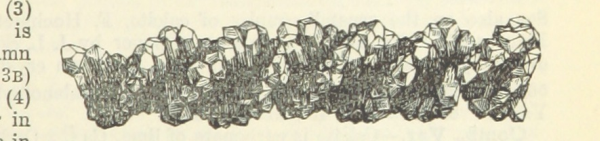

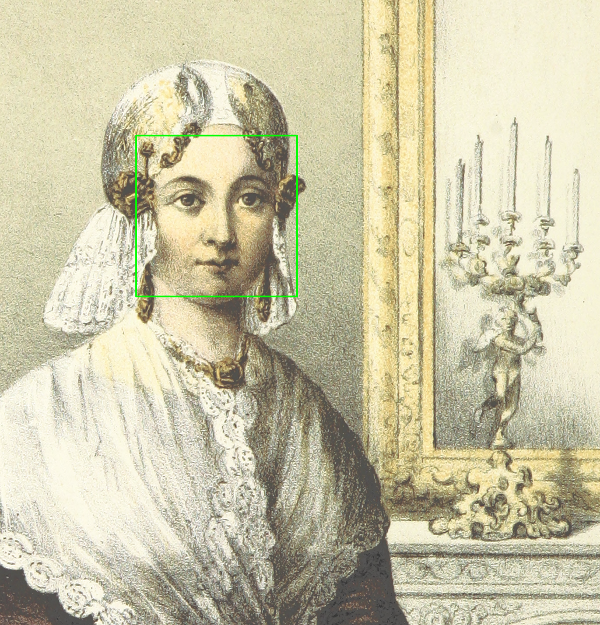

A small book illustration is chosen at random* from the pages of the digitised book collection and posted to a tumblr account with some descriptive information about the book it was taken from, along with its entry in the library catalogue (insofar as this is currently possible). The images are eclectic and seemingly unthemed, ranging from curious illustrations of animals to ornate, illuminated letters, and from drawings of archaeological relics to complex crystal structures.

* - The selection process is not entirely random any more, but more on this development later.

Image from ‘The British Miscellany: or, coloured figures of new, rare, or little known animal subjects, etc. vol. I., vol. II’, 003450253 page 275 by SOWERBY, James.

Image from ‘A System of Mineralogy … Fifth edition, rewritten and enlarged … With three appendixes and corrections. (Appendix I., 1868-1872, by G. J. Brush. Appendix II., 1872-1875, and Appendix III., 1875-1882, by E. S. Dana.)’, 004117752

Image from ‘The Struggle of the Nations. Egypt, Syria, and Assyria … Edited by A. H. Sayce. Translated by M. L. McClure. With map … and … illustrations’, 002415000 page 696 by MASPERO, Gaston Camille Charles.

The Mechanical Curator. How? Why?

James Baker has written a post illuminating some of the feelings behind the Mechanical Curator, so I have restricted myself to writing about how I gathered the images from the books, why I did so in the first place, my explorations with the images, and why I think it is more interesting that the Mechanical Curator selects images in an almost random fashion rather than a completely random one.

Gathering the images: Context

Microsoft ran a digitisation campaign from around 2005 to 2008 to provide content for their 'Live Book Search'. They partnered with a number of libraries and provided the funds and teams to digitise their partners' content.

I have spent some time reorganising and exploring the 65,000 volumes they digitised from the British Library's collection. The works date from the 14th century right up to the 20th century, with the vast majority published in the late 19th century. This also means that it has been straightforward to license these works as being in the public domain, which is why the images are being released with an explicit CC0 licence.

The collection consists of:

- ~65,000 zipped archives of JPEG2000 image files, with a file per page. Images of the covers and flysheets are also often included,

- The same number of zipped archives of OCR metadata, encoding the words and letters recognised by the OCR process,

- Simple METS metadata for the original (physical) item, which was supplied to Microsoft by the British Library,

- Directories of the unpacked OCR XML and METS metadata, organised by the identifier of the work.

Exploring the pages

I was interested in mechanical ways of exploring the works. Can I reuse existing face-detection techniques to find out how the depiction of faces changes over time? How can I home in on pages with interesting content like maps, people and diagrams, given that we cannot tell this from the metadata we have about these works?

Most importantly, how can I do this with the limited compute power I have?

Guideline #1: "Make Effective Filters, and use them early and often"

There are several million pages in this collection, each one potentially containing something of interest. Not all pages have illustrations on them, however, and running face detection on those pages would likely be a waste of time and effort. On inspection, the OCR XML occasionally contained information on areas where the OCR software believed it had found images:

```xml
...
<ComposedBlock ID="P153_CB00001" HPOS="94" VPOS="22" WIDTH="833" HEIGHT="225" STYLEREFS="TXT_0 PAR_LEFT" TYPE="Illustration">
  <GraphicalElement ID="P153_CB00001_SUB" HPOS="94" VPOS="22" WIDTH="833" HEIGHT="225"/>
</ComposedBlock>
...
```

As we cannot say whether the OCR process missed any images, this isn't enough information to guarantee finding all of the illustrations. However, it was enough to build a list of the 1.1 million pages that might be worth scanning. So, did I write some code to take the XML, parse it and pull out the right nodes? No. This leads me on to my second guideline:

Guideline #2: "Simple tools are your friends. Learn to love grep, sed, cat, awk and *nix pipes."

In this case, it was clear that the sequence of characters "<GraphicalElement ID=" would appear in the XML only to indicate the location of an illustration on a page. Using grep and a little bash scripting, I was able to build a list of the OCR XML files that were worth looking at in more detail.

(Example path to the OCR XML for ID '000000206': `by_id/0000/000000206/ALTO`)

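```bash
# Loop over each division directory (e.g. by_id/0000), then each work ID inside it
for division in $(ls by_id)
do
  for id in $(ls "by_id/$division")
  do
    # -l prints only the names of the files that contain a match
    grep -l "GraphicalElement" "by_id/$division/$id"/ALTO/*.xml >> ~/illustrations.txt
  done
done
```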

Much of the code above is there to loop through all the XML files I needed to check. There are much more concise (but less instructive!) ways to do this, and this way is hopefully clear. The bulk of the work is done by "grep -l", which makes grep print the names of the files that contain the matching text rather than the matching lines themselves. The '>>' redirects that output into the 'illustrations.txt' file, appending it to the end of whatever is in there already.
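For comparison, one of the more concise (if less instructive) approaches is sketched below. It is not the script used for the collection; it assumes the same `by_id/<division>/<id>/ALTO/*.xml` layout as the example path above, and the function name `list_illustrated_pages` is hypothetical. `find ... -exec grep -l ... {} +` hands files to `grep` in large batches rather than one directory at a time, and the pattern includes the opening `<` so that only the element tag itself matches.

```bash
#!/usr/bin/env bash
# Hypothetical helper: list every ALTO XML file under a root directory that
# mentions a GraphicalElement, i.e. pages the OCR flagged as illustrated.
# ASSUMES the layout <root>/<division>/<id>/ALTO/*.xml.
list_illustrated_pages() {
  local root="$1"
  # -path selects XML files inside an ALTO directory;
  # -exec ... {} + runs grep on batches of files at once;
  # grep -l prints each matching file name once.
  find "$root" -path '*/ALTO/*.xml' -type f -exec grep -l '<GraphicalElement' {} +
}
```

Usage: `list_illustrated_pages by_id > ~/illustrations.txt` starts a fresh list on each run (`>` rather than `>>`), and `wc -l < ~/illustrations.txt` then gives the number of candidate pages.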

Guideline #3: Break down what you want to do into small sets of simple tasks, rather than trying to do it all at once*.

Instead of trying to create a big application that would automatically find, parse and act on the OCR XML in one go, I broke the work into separate, straightforward tasks, like the simple one above that filtered the OCR XML and created a list of pages containing illustrations.

* This guideline stems from something I have learned from experience: You should avoid writing 'clever' code unnecessarily. You will revisit old code on occasion and you will be surprised at how quickly you forget all the clever tricks you used! Especially if you have changed programming languages and libraries since then too!

As you can perhaps see from the code, there are small scripts that create a queue of jobs from those pages, and other pieces of code that take those jobs and perform a single task on each. In this case, the task was to create a JPEG image of any small (less than roughly 8 in²) book illustration indicated by the OCR XML. The idea was to create a shareable collection of the small images that would fit on a USB flash drive. I now have a collection of 394,882 small illustrations, which occupy 41 GB of space. As 64 GB USB drives are not too expensive, I'd argue that I achieved that goal!
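The job-queue step can be sketched in the same spirit. This is a minimal, hypothetical version, not the code behind the collection: it reads ALTO file names (such as the lines of `illustrations.txt`) on standard input, pulls `HPOS`, `VPOS`, `WIDTH` and `HEIGHT` out of each `<GraphicalElement .../>` tag, and emits one job line per illustration smaller than 8 in². It assumes each tag sits on a single line and that the coordinates are pixels at an assumed 300 DPI; ALTO files can declare other measurement units, so `DPI` is a placeholder. The `WxH+X+Y` field uses the geometry form accepted by ImageMagick's `-crop`, so a separate worker could cut each illustration out of its page image.

```bash
#!/usr/bin/env bash
# Hypothetical job-queue builder. For each ALTO file named on stdin, print one
# line per GraphicalElement whose area is below MAX_SQ_IN square inches:
#   <alto-file> <TAB> <W>x<H>+<X>+<Y>
# ASSUMPTIONS: coordinates are pixels at DPI dots per inch (a guess), and each
# GraphicalElement tag fits on a single line.
DPI="${DPI:-300}"
MAX_SQ_IN="${MAX_SQ_IN:-8}"

small_illustration_jobs() {
  local file
  while IFS= read -r file; do
    # Print each <GraphicalElement .../> tag on a line of its own.
    grep -o '<GraphicalElement[^>]*>' "$file" |
      awk -v f="$file" -v dpi="$DPI" -v max="$MAX_SQ_IN" '
        {
          x = y = w = h = ""
          # Fields look like HPOS="94"; keep only the numeric part of each value.
          for (i = 1; i <= NF; i++) {
            if (split($i, kv, "=") < 2) continue
            val = kv[2]
            gsub(/[^0-9.]/, "", val)
            if (kv[1] == "HPOS") x = val
            else if (kv[1] == "VPOS") y = val
            else if (kv[1] == "WIDTH") w = val
            else if (kv[1] == "HEIGHT") h = val
          }
          if (w == "" || h == "") next
          # Area in square inches = (width / dpi) * (height / dpi).
          if ((w / dpi) * (h / dpi) < max)
            printf "%s\t%dx%d+%d+%d\n", f, w, h, x, y
        }'
  done
}
```

Usage: `small_illustration_jobs < ~/illustrations.txt > ~/jobs.tsv` would build the queue. For the `GraphicalElement` in the XML excerpt above (833 by 225 units, about 2.1 in² at the assumed 300 DPI) it emits `833x225+94+22`.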

It was a straightforward piece of work to write a script that pushed a random image to a tumblr account, with a caption containing the small amount of metadata we had on it. This idea was driven by informal conversations the Digital Scholarship team had about how to make this collection of public domain works more accessible and remixable. The Mechanical Curator was born at that point, and it has posted a random image to tumblr every hour since.
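Picking a random image and captioning it needs little more than `shuf` and `awk`. The sketch below assumes a hypothetical tab-separated index with one image per line (path, title, identifier, page, author) and reproduces the caption style of the examples earlier in this post; `random_image_with_caption` is an illustrative name, and the upload to tumblr itself is deliberately left out rather than guessed at.

```bash
#!/usr/bin/env bash
# Hypothetical sketch: choose one image at random from a tab-separated index
# and build a caption like "Image from ‘TITLE’, ID page N by AUTHOR."
# ASSUMED index format, one image per line:
#   image_path <TAB> title <TAB> identifier <TAB> page <TAB> author
# Posting the result to tumblr is not shown.
random_image_with_caption() {
  local index="$1"
  shuf -n 1 "$index" | awk 'BEGIN { FS = "\t" } {
    caption = "Image from ‘" $2 "’, " $3
    if ($4 != "") caption = caption " page " $4   # page is not always known
    if ($5 != "") caption = caption " by " $5     # nor is the author
    # Output: image path, a tab, then the caption.
    print $1 "\t" caption "."
  }'
}
```

Run once an hour (for example from a crontab entry with the schedule `0 * * * *` pointing at a hypothetical wrapper script), this would mimic the purely random behaviour described here.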

Well, it used to post images it selected on a purely random basis. It used to.

Interests and Mood for the Mechanical Curator

The random images are, by their nature, potentially very interesting, and they often surfaced works that would otherwise have been ignored because of the poor information we had on them, such as a single-word title like 'London' or a mundane description. With the Mechanical Curator, you don't know what is going to be posted next, and it became clear that this inherent randomness was intriguing and somewhat addictive.

But what if the Mechanical Curator 'curated' its output in some way? What if it gained a very slight bias in what it posted?

I worked on some code that would allow the curator to assess how similar two images were: not just in terms of the book's age, author and so on, but visually. This was written using OpenCV, and it generates gauges of the content that are described in the code as 'slantyness', 'bubblyness' or simply the size of the image. The curator adds its judgement to the posted image as tags, saying why it finds the images similar, whether it has detected a face or profile within the image, and whereabouts it believes that face is.

The Mechanical Curator now looks through a number of randomly selected images, and will post an image if it is similar enough to the one it most recently uploaded, both visually and by metadata similarities. However, I didn't want to tip the scales of randomness too much. If it cannot find a match after checking eight images, it gets bored and posts the eighth one as a '#new_train_of_thought'.
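The boredom threshold can be sketched as a loop over a random sample. This hypothetical version judges similarity on metadata alone (same author, or publication years within an assumed 10-year window) because the OpenCV visual gauges are not reproduced here; the candidate format `path<TAB>year<TAB>author`, the function names and the `#similar` tag are also assumptions. Only the `#new_train_of_thought` tag and the limit of eight candidates come from the description above.

```bash
#!/usr/bin/env bash
# Hypothetical sketch of the "boredom threshold": sample up to MAX_TRIES random
# candidates and pick the first one judged similar to the previous post; if none
# is, pick the last one sampled and tag it #new_train_of_thought.
# ASSUMPTIONS: candidates are lines "image_path<TAB>year<TAB>author", and
# similarity is a crude metadata-only stand-in for the real visual comparison.
MAX_TRIES=8
YEAR_WINDOW=10

# Succeed if the candidate shares an author with the previous post,
# or was published within YEAR_WINDOW years of it.
is_similar() {
  local prev_year="$1" prev_author="$2" year="$3" author="$4"
  local diff=$(( year - prev_year ))
  (( diff < 0 )) && diff=$(( -diff ))
  [[ "$author" == "$prev_author" ]] || (( diff <= YEAR_WINDOW ))
}

# Args: previous year, previous author, candidate index file.
# Prints "<image_path><TAB><tag>".
choose_next() {
  local prev_year="$1" prev_author="$2" index="$3"
  local path year author last=""
  while IFS=$'\t' read -r path year author; do
    last="$path"
    if is_similar "$prev_year" "$prev_author" "$year" "$author"; then
      printf '%s\t%s\n' "$path" "#similar"
      return 0
    fi
  done < <(shuf -n "$MAX_TRIES" "$index")
  # No match among the sampled candidates: the curator "gets bored".
  printf '%s\t%s\n' "$last" "#new_train_of_thought"
}
```

Keeping the sample small is what stops the chain from running too long: with only eight tries per post, a run of similar images ends as soon as one sample fails to turn up a match.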

Due to its low boredom threshold, it regularly starts a new train of thought, and so doesn't get stuck in a loop of posting floral designs, cartoon line-art images or etchings!